'AI' just means LLMs now

There used to be many possible candidates for how humans might build intelligent systems. Now there's only one.

This article is the first part of Superintelligence, a series intended to work out how to develop smarter-than-human AI systems from first principles.

Since the industrial revolution, humans have dreamed of building intelligent machines.

In the 1800s, Charles Babbage designed the first general-purpose computer, the Analytical Engine, and Ada Lovelace wrote the first published algorithm intended to run on it. They also speculated about whether computers might one day be creative and compose their own music or art.

Fast forward to the twentieth century, and lots of people started thinking about what it could look like to build intelligence artificially. The most famous early thinker of this kind is Alan Turing, who proposed the Turing Test and was a pioneer of theoretical computer science.

Even as AI spent several decades as a marginalized topic for scientific study, the idea of AI as science fiction blossomed. It has since played a central role in movies (2001: A Space Odyssey, Blade Runner, The Terminator, The Matrix, Ex Machina) and TV (Star Trek, Knight Rider, The Twilight Zone, Westworld, Black Mirror). It’s safe to assume that anyone who’s watched Western media over the last fifty years knows what AI is.

The Form of Superintelligence

And through science fiction we’ve seen AI take many forms. I don’t think there’s a definition of exactly what an AI should look like. Perhaps in defining AI we can take a cue from Justice Potter Stewart’s 1964 Supreme Court opinion: “I know it when I see it.”

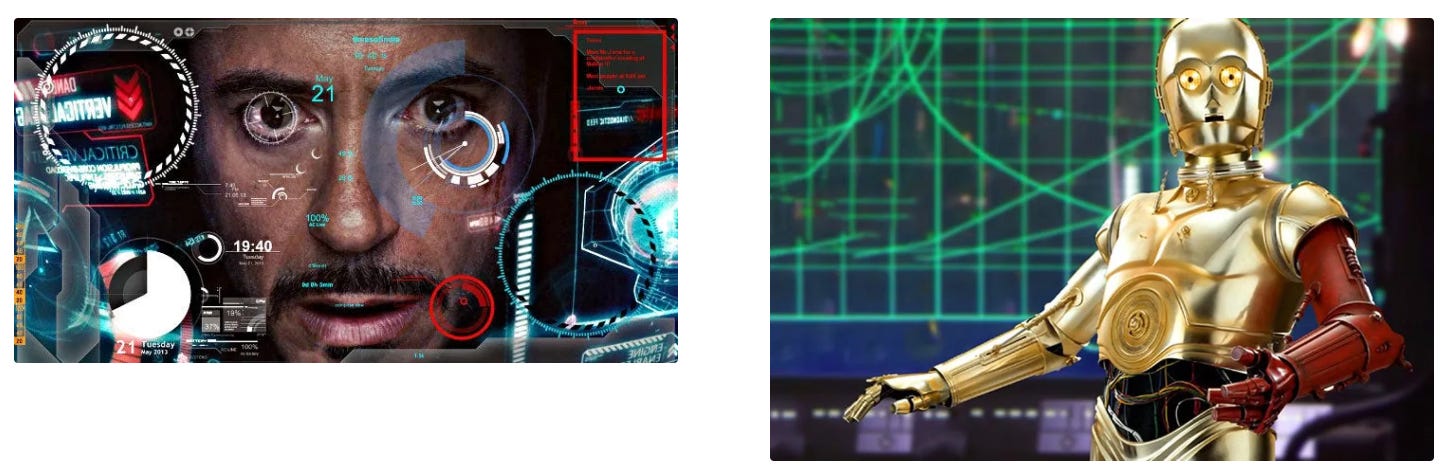

Things get even murkier when we consider superintelligence. Are all these AIs superintelligent, that is, smarter than humans? C-3PO certainly was; that guy knew everything. But many media portrayals present AI as human-level, not smarter.

For the purpose of this series of posts, I’m going to define ‘superintelligence’ as whatever we saw in Iron Man (Jarvis) and Star Wars (C-3PO).

So we’ll take a reductive position: superintelligence is simply a helpful machine that’s at least as capable as a human and more knowledgeable.

Do we have this yet? Unfortunately, no. The newest, smartest models from OpenAI are very good: they can code like professional programmers and have achieved gold-medal performance at the International Mathematical Olympiad. But they’re not yet considered human-level in many areas. (This uneven skill profile is what some have described as jagged intelligence.)

Biological Candidates for Superintelligence

For a long time we didn’t know how to build Jarvis. We had no idea what superintelligence would look like. Many suspected that some form of connectionism might be the path, but didn’t know how to scale it.

In fact, lots of early AI research was inspired by the human brain. The most popular arguments for superintelligence, such as Nick Bostrom’s original ones, rest on the observation that the human brain is an existence proof: we already know that (non-super) intelligence is possible to build. So you can make arguments like this one:

The human brain contains about 10^11 neurons. Each neuron has about 5*10^3 synapses, and signals are transmitted along these synapses at an average frequency of about 10^2 Hz. Each signal contains, say, 5 bits. This equals 10^17 ops. (…)

and its ultimate conclusion:

Depending on degree of optimization assumed, human-level intelligence probably requires between 10^14 and 10^17 ops.
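The arithmetic in the first quoted passage can be checked directly. The sketch below is a minimal illustration that assumes, as the quote implicitly does, that one transmitted bit counts as one operation; because the signal rate is given in Hz, the result is a rate per second. The constant and function names are hypothetical.

```python
import math

# Figures taken from the quoted 1998 estimate.
NEURONS = 10**11                 # neurons in the human brain
SYNAPSES_PER_NEURON = 5 * 10**3  # synapses per neuron
SIGNAL_RATE_HZ = 10**2           # average signals per second along each synapse
BITS_PER_SIGNAL = 5              # "say, 5 bits"

def brain_ops_per_second() -> int:
    """Multiply the quoted factors, treating each transmitted bit as one 'op'."""
    return NEURONS * SYNAPSES_PER_NEURON * SIGNAL_RATE_HZ * BITS_PER_SIGNAL

estimate = brain_ops_per_second()
# Keep only the power of ten, which is all the quote claims.
order_of_magnitude = math.floor(math.log10(estimate))
print(f"{estimate:.2e} ops/s, order of magnitude 10^{order_of_magnitude}")
```

The exact product is 2.5 × 10^17, so the quoted "10^17 ops" is best read as an order-of-magnitude figure.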

Although I deeply admire the author’s conviction (and prescience, since these beliefs were published in 1998), it seems flawed to equate the operations performed by a human brain with the simple ‘ops’ (such as floating-point operations) that happen inside a computer. (I think this is the basic point Roger Penrose made when he hypothesized that quantum mechanics plays an important role in human consciousness.)

Confusing matters further, the systems that ended up getting us closest to superintelligence are called neural networks, which is simultaneously an excellent name and a dreadful misnomer. They’re systems of interconnected, dynamically updatable components, but beyond a loose historical inspiration they have little to do with biological neurons.

Anyway, we spent a long time making these types of biological analogies, and they didn’t get us far. What worked (machine learning and neural networks) was at best very loosely inspired by how the human brain functions.
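To make "interconnected, dynamically updatable components" concrete, here is a minimal sketch of a single artificial neuron: a weighted sum of inputs passed through a nonlinearity, with its weights updated by gradient descent. The task (learning logical OR), learning rate, and epoch count are illustrative assumptions chosen for this example, and all names are hypothetical. The point is that the "neuron" is plain arithmetic, with no biological machinery involved.

```python
import math

def sigmoid(z: float) -> float:
    """Squash a real number into the interval (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def train_neuron(data, lr=0.5, epochs=2000):
    """Train one artificial neuron (a logistic unit) with plain gradient descent.

    data: list of ((x1, x2), target) pairs with target in {0, 1}.
    Returns the learned weights and bias.
    """
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        for (x1, x2), y in data:
            # Forward pass: weighted sum of the inputs, then a nonlinearity.
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # For log-loss, the gradient w.r.t. the weighted sum is (p - y).
            err = p - y
            # The "dynamically updatable" part: nudge each parameter downhill.
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b

def predict(w, b, x1, x2) -> int:
    """Threshold the neuron's output at 0.5."""
    return 1 if sigmoid(w[0] * x1 + w[1] * x2 + b) >= 0.5 else 0

OR_DATA = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 1)]
w, b = train_neuron(OR_DATA)
print([predict(w, b, x1, x2) for (x1, x2), _ in OR_DATA])
```

A modern network wires millions or billions of such units together in layers; nothing in them models spikes, neurotransmitters, or dendrites.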

Digital Candidates for Superintelligence

Since 2012 (when AlexNet was introduced), each year has brought us closer to building Jarvis or C-3PO. We developed better machine learning and neural networks, got good at training them to mimic human text, and now we’re making steady progress on incentivizing them to grow smarter than humans in some areas. This is really exceptional progress.

As mentioned, the best systems that we have right now are already very useful. They know how to code, give directions, and write recipes. They’re decent therapists and life coaches. They’re coming around in the creativity department, too, and writing better prose and poetry each year.

One might expect, then, that we as a field have several candidate systems for superintelligent AI. Perhaps we have a really good video simulator, a few companies have embodied, continually learning robots, and there’s a speech system out there that also exhibits Jarvis-like capabilities.

But this turns out not to be the case. There is only one existing technology that’s close to Jarvis: large language models. We haven’t built models that get smarter by exploring the world or watching lots of movies. We’ve only built models that get smarter by reading lots of text (say, all the words on the Internet) and then marginally smarter after doing a few thousand math problems.
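"Getting smarter by reading lots of text" refers to pretraining on next-token prediction: given the text so far, predict what comes next. As a toy stand-in only, the sketch below swaps in the simplest possible version of that objective, a word-level bigram counter that predicts the most frequent follower of a word. Real LLMs learn this with transformer networks over subword tokens at vastly larger scale; the corpus and function names here are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, Optional

def train_bigram(corpus: str) -> Dict[str, Counter]:
    """Count, for every word, how often each other word follows it."""
    words = corpus.lower().split()
    counts: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        counts[current][nxt] += 1
    return counts

def predict_next(model: Dict[str, Counter], word: str) -> Optional[str]:
    """Return the most frequent next word seen in training, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram(corpus)
print(predict_next(model, "the"))
```

More text means better counts; an LLM replaces the lookup table with a learned network that can generalize to contexts it has never seen.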

All we have is language models.

Hi Jack!

Enjoyed your appearance on my favorite podcast, ODD LOTS!!!

I wanted to ask you about a couple things here-

My understanding is that AI is made up of many things, including ML, DL, NLP, and LLMs (I understand some of those are subsets of others).

LLMs clearly have caught the public’s imagination more than, let’s say, ML methods for early detection of breast cancer…

But my (admittedly much less educated) take is that all of ML and DL is important.

I also grew more disappointed the more I tested LLMs for things like novel idea generation (in my case as a comedian, I was trying to get them to help me write jokes, and as a digital marketer, to come up with marketing ideas).

Because LLMs act as a normalization machine based on the relationship between things as they are, creativity seems to be more difficult for them. They’re more likely to give you the same “creative” things that their training told them were creative. Or tired old dad jokes.

I did a tiny bit of research into how relationships are scored, and whether there was a way to do the inverse, which might get us closer to a bisociation type of creativity. Or to discover what the 30-, 35-, or 90-degree angle from a relationship might be. I’ve actually prompted LLMs with polar ideas and asked them for 90-degree “left hand turn” ideas, with some success. But I know it’s not coming from a quantified place.

I was excited about agents in the sense of multi-agent, LLM-coordinated tools that would also use Python, RAG, whatever… and I am currently most impressed by Perplexity’s eagerness to write and run Python code to answer my questions.

In any case, that’s why I think the evolution of AI is limited by the nature of LLMs, and perhaps by transformers and matrices more specifically.

Where do you see all this going, at that level of detail?

Thanks!

All we have is language! Language as the OS for collective human ambition. Enjoyed reading your post, thanks.